Search Engine Indexing

Purpose

To give administrators control over the behaviour of search engines when they crawl the site.

Details

Search engines (e.g. Google) search the internet for content to include in their index:

- Crawling: visiting a website at its root, analysing the `index.html` page and following every link it contains; looking for a sitemap and visiting every URL it lists
- Indexing: the crawler follows a link on a (remote) website and ends up on a local page, which may or may not be reachable through crawling
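The crawling step above comes down to two extraction tasks: collecting the links on an HTML page and collecting the URLs listed in a sitemap. The following is a minimal standard-library sketch of both. The function and class names are hypothetical, and it assumes the sitemap uses the sitemaps.org `<urlset>` format. It is not part of this feature and only illustrates what a crawler does.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin
import xml.etree.ElementTree as ET

# Namespace used by sitemaps.org <urlset> documents (assumed sitemap format).
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


class LinkCollector(HTMLParser):
    """Collect absolute URLs from <a href="..."> tags on one page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    # Resolve relative links against the page's own URL.
                    self.links.append(urljoin(self.base_url, value))


def links_on_page(base_url: str, html: str) -> list[str]:
    """Return every link a crawler would follow from this page."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    collector.close()
    return collector.links


def urls_in_sitemap(xml_text: str) -> list[str]:
    """Return every <loc> entry of a sitemap <urlset>."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.iter(SITEMAP_NS + "loc") if loc.text]
```

A crawler would feed each discovered URL back into the same loop, subject to the site's robots.txt rules.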

The feature instructs crawlers whether or not to include the site's content in their index.
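To make "instructs crawlers" concrete: a crawler following RFC 9309 (the Robots Exclusion Protocol) checks each URL path against the robots.txt rules before fetching it. The matching rule with the longest pattern wins. On a tie, the RFC recommends that Allow wins. A path that matches no rule may be fetched. The sketch below implements that precedence for a single `User-agent: *` group, using rule sets shaped like the ones this feature serves for `/robots.txt`. `is_allowed` and the `(kind, pattern)` tuples are hypothetical names. Percent-encoding normalisation and multiple user-agent groups are left out.

```python
import re
from typing import Iterable, Tuple

# A rule is ("allow" | "disallow", path pattern), e.g. ("disallow", "/my/drive").
Rule = Tuple[str, str]


def pattern_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a robots.txt path pattern into a regex.

    '*' matches any sequence of characters and a trailing '$' anchors the end;
    everything else is matched literally.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    # re.match() anchors at the start, so an unanchored pattern is a prefix match.
    return re.compile(body + (r"\Z" if anchored else ""))


def is_allowed(rules: Iterable[Rule], path: str) -> bool:
    """Decide whether a crawler may fetch `path` under RFC 9309 precedence."""
    best_len = -1
    best_allow = True  # no matching rule means the path is allowed
    for kind, pattern in rules:
        if not pattern:  # an empty "Disallow:" line disallows nothing
            continue
        if pattern_to_regex(pattern).match(path):
            allow = kind.lower() == "allow"
            # Longest pattern wins; on equal length, Allow wins.
            if len(pattern) > best_len or (len(pattern) == best_len and allow):
                best_len = len(pattern)
                best_allow = allow
    return best_allow
```

Because patterns match by prefix, `Disallow: /my/drive` also covers `/my/drive/...` and any other path that merely starts with `/my/drive`, while `Disallow: /` covers every path on the host.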

Requests for `GET /robots.txt` are answered dynamically, depending on whether the feature is enabled:

- enabled: Disallow access to `/my/transfers/*` and `/my/drive`; the rest is not explicitly disallowed
- disabled: Disallow all access
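Here is a minimal sketch of the dynamic response, assuming a Python WSGI stack. `robots_txt_body` returns one of the two rule sets listed above, and `make_robots_app` serves it for `GET /robots.txt`. The `User-agent: *` line, the function names and the `is_enabled_for_host` hook are assumptions for illustration. The hook stands in for however a Storagehost's Adminunit setting is actually looked up. Only the Disallow paths come from this page.

```python
from typing import Callable

# Served when indexing is enabled: only these areas are disallowed,
# every other path is implicitly allowed.
ENABLED_RULES = (
    "User-agent: *\n"
    "Disallow: /my/transfers/*\n"
    "Disallow: /my/drive\n"
)

# Served when indexing is disabled (the default): "/" matches every path.
DISABLED_RULES = (
    "User-agent: *\n"
    "Disallow: /\n"
)


def robots_txt_body(indexing_enabled: bool) -> str:
    """Return the robots.txt body for an Adminunit's indexing setting."""
    return ENABLED_RULES if indexing_enabled else DISABLED_RULES


def make_robots_app(is_enabled_for_host: Callable[[str], bool]):
    """Build a WSGI app that answers GET /robots.txt per request.

    is_enabled_for_host is a hypothetical hook mapping the requested
    Storagehost to its Adminunit's setting.
    """
    def app(environ, start_response):
        # This sketch only handles /robots.txt; anything else is a 404.
        if environ.get("REQUEST_METHOD") != "GET" or environ.get("PATH_INFO") != "/robots.txt":
            start_response("404 Not Found", [("Content-Type", "text/plain")])
            return [b"not found\n"]
        host = environ.get("HTTP_HOST", "")
        body = robots_txt_body(is_enabled_for_host(host)).encode("utf-8")
        start_response("200 OK", [
            ("Content-Type", "text/plain; charset=utf-8"),
            ("Content-Length", str(len(body))),
        ])
        return [body]

    return app
```

Because the body is computed on each request, changing the setting affects the next fetch of `/robots.txt` without regenerating any file. Crawlers may still use a cached copy for a while; RFC 9309 says they should not rely on one for more than 24 hours.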

Configuration

- Scope: Configured at Adminunit level; applies to all Storagehosts of an Adminunit.

- Privileges: Configurable by an Admin

- Default: disabled; search engines are instructed not to store visited pages in their index or to follow links, on any page.
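This page does not say how the "do not store, do not follow links" instruction is delivered beyond robots.txt. One standard mechanism is the `noindex, nofollow` robots directive. It can be sent as an `X-Robots-Tag` HTTP response header or as a `<meta name="robots">` tag. The sketch below assumes the header approach and a Python WSGI stack. `add_robots_header` and the `is_enabled_for_host` hook are hypothetical names.

```python
from typing import Callable

# Standard robots directive: do not index this page, do not follow its links.
NOINDEX_NOFOLLOW = ("X-Robots-Tag", "noindex, nofollow")


def add_robots_header(app, is_enabled_for_host: Callable[[str], bool]):
    """Wrap a WSGI app so every response carries 'noindex, nofollow'
    when the Adminunit has search engine indexing disabled.

    is_enabled_for_host is a hypothetical hook mapping the requested
    Storagehost to its Adminunit's setting.
    """
    def wrapped(environ, start_response):
        enabled = is_enabled_for_host(environ.get("HTTP_HOST", ""))

        def start(status, headers, exc_info=None):
            if not enabled:
                # Append the directive without mutating the caller's list.
                headers = list(headers) + [NOINDEX_NOFOLLOW]
            return start_response(status, headers, exc_info)

        return app(environ, start)

    return wrapped
```

A crawler only sees this header on responses it actually fetches, so it complements rather than replaces the robots.txt rules.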

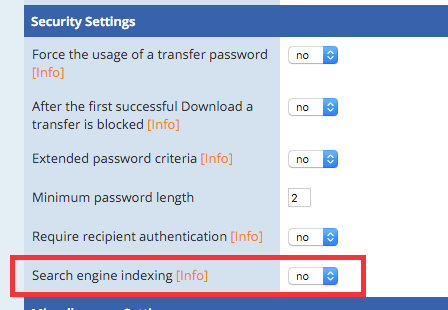

The feature is enabled or disabled in the admin interface:

Dependencies

None

Conflicts

None