Cassandra

The skp-storage service can use Cassandra, a highly available, eventually consistent, distributed database, as its persistence layer. This page describes the concepts and the integration approach.

Concept

A distributed Cassandra database consists of several nodes (= running instances of the database service), combined in a cluster.

Data is stored in rows in a table. Tables belong to a keyspace; access to a keyspace is controlled per user and role.

Rows are grouped into partitions, which determine how data is stored on disk and replicated within the cluster. A keyspace has a replication strategy, which is typically aware of the locality of nodes in terms of racks and datacenters.

Nodes gossip with each other to determine availability, connecting to each other's listen_address. To ensure objects fulfil their availability constraints, partitions may be replicated to other nodes.

Clients connect to one or more nodes via their rpc_address.

System architecture and integration

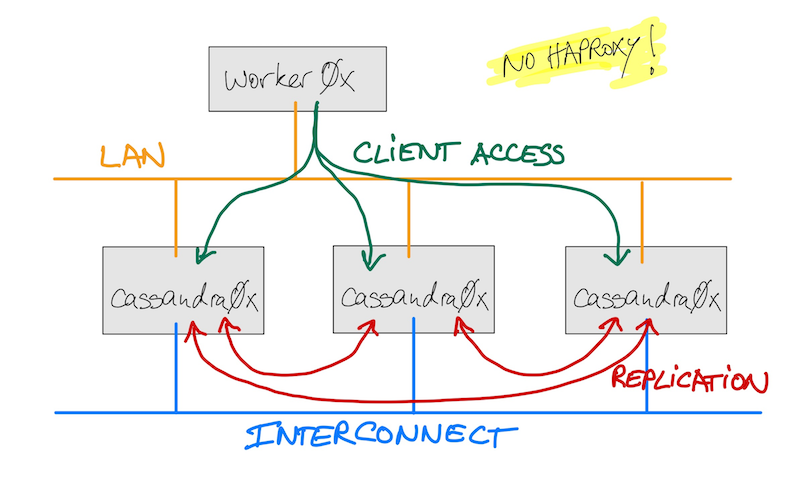

The Cassandra nodes require two network interfaces:

- LAN: clients connect to the database to access the data. This should be a trusted network.

- Interconnect: the database nodes communicate with each other. If this network is not trusted, SSL encryption can be configured.

Clients automatically choose to communicate with the server that provides them with the best service. As a result, using a load balancer between clients and database nodes is discouraged. Since the client application skp-storage no longer needs to be colocated with the actual data, it can be installed on the worker0x nodes instead.

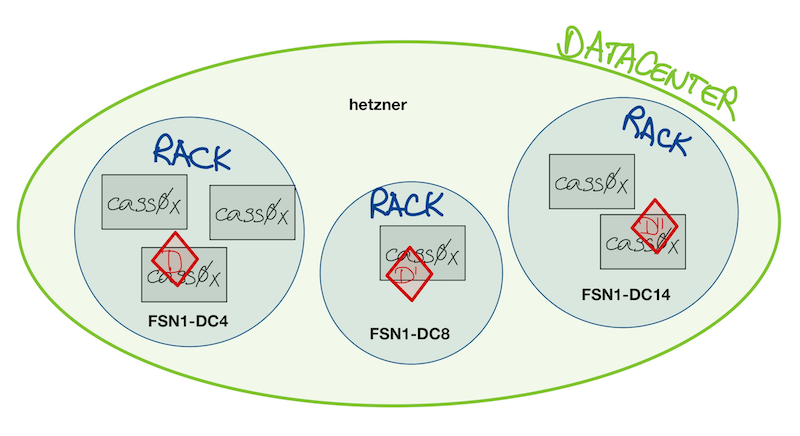

When data is replicated across the cluster to achieve the required availability, Cassandra attempts to distribute it across individual availability zones, called racks, within each datacenter whenever possible.

Each node must be informed of its location (datacenter and rack), using the cassandra-rackdc.properties file:

dc=hetzner

rack=FSN1-DC4
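The rack-aware placement described above can be illustrated with a simplified sketch. It is not Cassandra's actual implementation: it only models the idea of walking the ring and preferring racks that do not yet hold a replica. The node names and the racks FSN1-DC5 and FSN1-DC6 are hypothetical.

```python
from typing import NamedTuple


class Node(NamedTuple):
    name: str
    dc: str
    rack: str


def pick_replicas(ring: list[Node], start: int, dc: str, rf: int) -> list[Node]:
    """Walk the ring from `start` and pick up to `rf` nodes in `dc`,
    preferring racks that do not hold a replica yet."""
    in_ring_order = [ring[(start + i) % len(ring)] for i in range(len(ring))]
    candidates = [node for node in in_ring_order if node.dc == dc]

    replicas: list[Node] = []
    skipped: list[Node] = []
    seen_racks: set[str] = set()
    for node in candidates:
        if len(replicas) == rf:
            break
        if node.rack in seen_racks:
            skipped.append(node)  # rack already used, keep as a fallback
        else:
            replicas.append(node)
            seen_racks.add(node.rack)

    # Fewer distinct racks than replicas: fill up with skipped nodes in ring order.
    replicas.extend(skipped[: rf - len(replicas)])
    return replicas


# Hypothetical four-node ring; only FSN1-DC4 appears in this page.
ring = [
    Node("cassandra01", "hetzner", "FSN1-DC4"),
    Node("cassandra02", "hetzner", "FSN1-DC4"),
    Node("cassandra03", "hetzner", "FSN1-DC5"),
    Node("cassandra04", "hetzner", "FSN1-DC6"),
]
replicas = pick_replicas(ring, start=0, dc="hetzner", rf=3)
```

With three distinct racks available, each replica lands in its own rack. If only two racks exist, the third replica falls back to a rack that is already in use.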

System requirements

TODO: list system requirements

Local implementation

- cluster name: C01 (test), C02 (production)

- nodes: cassandra0x.c0x.skalio.net; a cluster has a minimum of three nodes

- replication strategy: NetworkTopologyStrategy; replication factor of 3

- datacenter name: hetzner

- rack: Hetzner's definition of the location of each physical machine, for example FSN1-DC4

- snitch: GossipingPropertyFileSnitch

- keyspace: skp

- user: skp_user, member of the skp_role role

- role: skp_role, has admin privileges on the skp keyspace

- listen_address: IP of interface in cassandra-interconnect-VLAN

- rpc_address: IP of interface in LAN
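As a rough sketch, the keyspace, role and user settings listed above could be expressed as CQL statements. The helper functions below are hypothetical and only build the statement strings. Mapping "admin privileges" to GRANT ALL PERMISSIONS is an assumption, and the password is a placeholder.

```python
def keyspace_cql(keyspace: str, datacenter: str, replication_factor: int) -> str:
    """Build a CREATE KEYSPACE statement using NetworkTopologyStrategy."""
    replication = (
        "{'class': 'NetworkTopologyStrategy', "
        f"'{datacenter}': {replication_factor}}}"
    )
    return f"CREATE KEYSPACE IF NOT EXISTS {keyspace} WITH replication = {replication};"


def access_cql(keyspace: str, role: str, user: str, password: str) -> list[str]:
    """Build the statements for a role with keyspace access and a login user."""
    return [
        f"CREATE ROLE IF NOT EXISTS {role};",
        # Assumption: "admin privileges" is modelled as ALL PERMISSIONS.
        f"GRANT ALL PERMISSIONS ON KEYSPACE {keyspace} TO {role};",
        f"CREATE ROLE IF NOT EXISTS {user} WITH PASSWORD = '{password}' AND LOGIN = true;",
        f"GRANT {role} TO {user};",
    ]


# "change-me" is a placeholder, not a real credential.
statements = [keyspace_cql("skp", "hetzner", 3)]
statements += access_cql("skp", "skp_role", "skp_user", "change-me")

for statement in statements:
    print(statement)
```

The printed statements can be reviewed and then run through cqlsh by an administrator.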

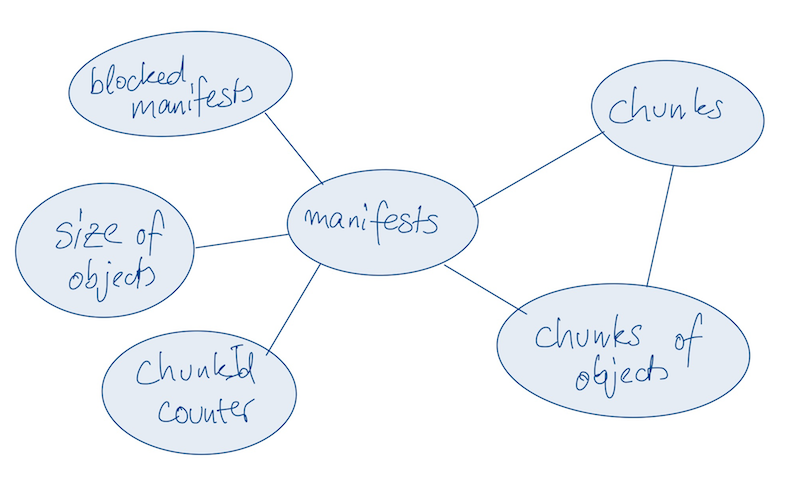

Data model

Cassandra stores data related to an object in multiple tables. Data is denormalized to best support the underlying storage technology.

The chunks table contains the actual payload, split into chunks of up to 1 MB each. An explicit partition key is used to stay within the recommended maximum partition size and to keep contiguous data close together.
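The chunking idea can be sketched as follows. The actual schema of the chunks table is not described on this page, so the bucket scheme is purely illustrative: the value of CHUNKS_PER_PARTITION and the (bucket, chunk_index) layout are assumptions, as is reading 1 MB as 1024 * 1024 bytes.

```python
from typing import Iterator

CHUNK_SIZE = 1024 * 1024      # assumed interpretation of "up to 1 MB"
CHUNKS_PER_PARTITION = 64     # hypothetical cap, bounds the partition size


def split_into_chunks(payload: bytes) -> Iterator[tuple[int, int, bytes]]:
    """Yield (bucket, chunk_index, data) for each chunk of the payload.

    Consecutive chunks share a bucket, so contiguous data stays in the same
    partition, while no partition grows beyond CHUNKS_PER_PARTITION chunks.
    """
    for offset in range(0, len(payload), CHUNK_SIZE):
        chunk_index = offset // CHUNK_SIZE
        bucket = chunk_index // CHUNKS_PER_PARTITION
        yield bucket, chunk_index, payload[offset:offset + CHUNK_SIZE]


payload = bytes(2 * CHUNK_SIZE + 10)
rows = list(split_into_chunks(payload))
```

In such a layout, the partition key would combine an object identifier with the bucket, and chunk_index would act as the ordering column within the partition.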

Considerations regarding CAP

The skp-storage service uses the following configuration regarding CAP:

- Replication factor: 3. Three replicas of each partition exist, ideally located in separate availability zones.

- Write requests: Consistency Level ONE. A write request is routed to three virtual nodes in parallel. The write request blocks until the first node responds.

- Read requests: Consistency Level QUORUM. A read request is routed to the three virtual nodes that are responsible for the partition. At least two nodes must respond and agree on the data before it is returned. If the quorum cannot be satisfied, the read request is denied.
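The arithmetic behind this configuration can be written down as a small sketch. The function names are hypothetical. The quorum formula (half the replicas, rounded down, plus one) and the overlap rule (read replicas plus write replicas must exceed the replication factor) are the commonly documented rules for Cassandra consistency levels.

```python
def quorum(replication_factor: int) -> int:
    """Number of replicas that form a quorum."""
    return replication_factor // 2 + 1


def replicas_required(level: str, replication_factor: int) -> int:
    """Replicas that must respond for the given consistency level."""
    levels = {
        "ONE": 1,
        "QUORUM": quorum(replication_factor),
        "ALL": replication_factor,
    }
    return levels[level]


def tolerated_failures(level: str, replication_factor: int) -> int:
    """Replicas that may be down while requests at this level still succeed."""
    return replication_factor - replicas_required(level, replication_factor)


def read_overlaps_write(write_level: str, read_level: str, replication_factor: int) -> bool:
    """True if every read is guaranteed to include a replica that acknowledged the write."""
    required = replicas_required(write_level, replication_factor)
    required += replicas_required(read_level, replication_factor)
    return required > replication_factor


RF = 3
write_tolerance = tolerated_failures("ONE", RF)     # 2
read_tolerance = tolerated_failures("QUORUM", RF)   # 1
overlap = read_overlaps_write("ONE", "QUORUM", RF)  # False: 1 + 2 is not > 3
```

Under these rules, writes remain available with two replicas down and reads with one replica down. Because 1 + 2 does not exceed 3, a read is not guaranteed to include the replica that acknowledged the latest write, which matches the eventually consistent model described at the top of this page.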